How I think about learning

The most important skill you can hone

I’ve spent hours trying to write a post on efficient language model inference and I couldn’t put something together that felt worth reading. I need to think some more before I can write something good on it.1

But there’s something, that I’ve spent a *lot* of time thinking about: how to learn effectively.

I’ll explain the following lessons with examples from my own journey with computer science, machine learning and the world of language models. But I’ve also applied these lessons (to some extent) to learn all sorts of things like how to ride a unicycle, write songs, snowboard, and do a backflip, just to name a few.

This is a collection of things that I’ve learned and that work for me. Your mileage might vary (more on this in lesson #5). I should also mention I haven’t read much on how to learn and these are based entirely on my experiences, so I might be re-inventing the wheel with some of these.

There are 5 independent-ish lessons:

Top-down and bottom-up learning

Learning should (only sometimes) be hard

Don’t worry about obsolescence

Train your gut, then follow it

Learn it your way

These insights, each to varying degrees, help answer the two fundamental questions when it comes to learning:

What should I learn?

How should I learn?

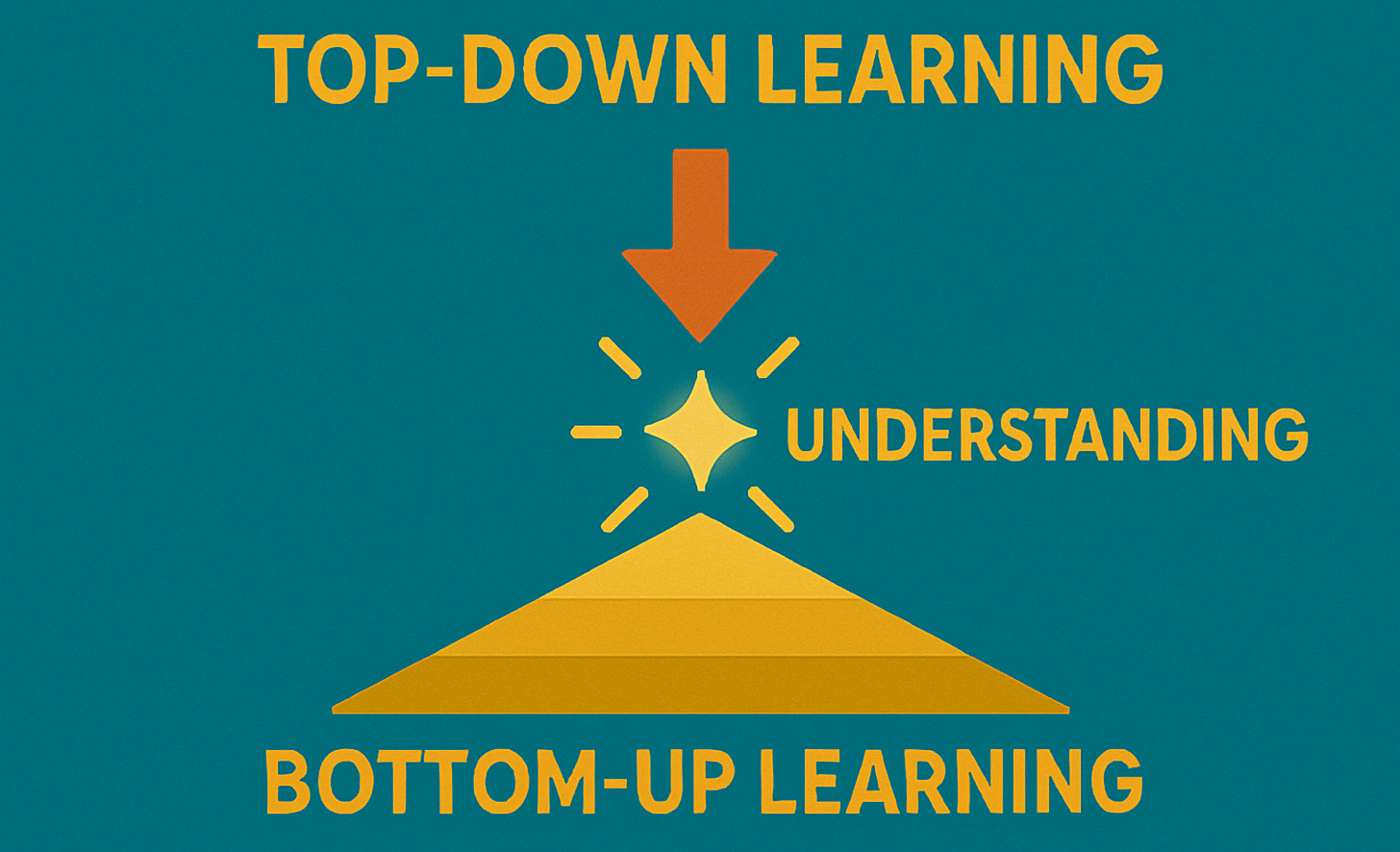

Top-down and bottom-up

There are two way to think about learning.

Bottom-up learning would start with the fundamentals and then build up to the eventual goal. This is how most schools operate: start with addition then multiplication then algebra then calculus.

Top-down learning would start with a high level goal and slowly uncover lower level details that need learned to achieve that goal. This is generally how on-the-job learning goes. You need to get something done, so you learn what you need to get it done.

Fundamental understanding happens when a top-down learning path collides with the foundation built from bottom-up learning. I think it’s super important to continue to do both even as you move on from undergrad and into more results-oriented work like a job or PhD program.

While the amount of top-down learning you need to do increases drastically once you need to accomplish things, I’ve found that continuing bottom-up approaches has been immensely helpful.

Being a “quick learner” is a huge asset to any line of work. But I don’t see people talk about how being a “quick learner” isn’t just some inherent thing you’re born with. It’s also a skill you can keep getting better at. And the way to get better at learning things quickly is to build a foundation as wide and tall as possible, so that when you need to learn how to do something you’ve never done before, your top-down learning path hits that foundation really quickly.

Learning should (only sometimes) be hard

No pain no gain, I’m afraid. Learning happens when your brain is under tension, the same way muscle growth happens when your muscles are under tension. Watching youtube videos is an awesome way to get introduced to a topic (and one of my favorite ways to learn), but deep understanding comes from sitting down with a pen and paper (or IDE) and really engaging with the material.

That said, with lifting as with learning, proper rest and keeping motivation up are also super important. I find that a balance of deep focus and light exposure when learning something new keeps me in a learning groove. In practice, my “light engagement activities” are watching youtube videos, listening to podcasts while I run or workout, and scrolling [AI research] twitter. My “deep focus activities” are trying to reimplement a paper or pulling down some open source repo and playing with it. This means trying to run stuff, extend it with new functionality, or asking Claude questions about what different parts of the code are doing.

This balances engagement with high-level ideas and low-level details of a topic. This balance keeps me motivated, and I find a natural gravitation towards topics where I want to engage with the details more. This is how I’ve discovered stuff I’m really interested in — through lots of work.

For me, passion always followed the hard work, not the other way around.

Don’t worry about obsolescence

I was talking to my friend the other day and he, slightly anxious, asks me, “What’s the most future-proof skill I should learn?”

It’s a natural question. What’s the point of learning something the next iteration of ChatGPT can do in seconds?

But it’s the wrong way to think about learning.

Ingenuity comes in many forms, but by far the most common one I see in AI research is clever interpolation and synthesis of ideas. That is, people taking idea #1 and idea #2 and combining them in some clever way to create something useful. Some of my favorite examples of this are grouped-query attention and hybrid attention/state space models, like griffin.

My point here is that this type of ingenuity benefits immensely from knowing lots of stuff regardless of how “cutting edge” it is. When the creators of paged attention learned about memory paging in their OS class, I’m sure they had no idea that this would be a key concept in building vLLM, the most widely used inference engine in the open-source community. What they didn’t do is go “OS’s already implement memory paging so there’s no point in learning this”

If you want to learn something, just learn it. You never know how you might use it. As for obsolescence, stay flexible and adapt to change and you’ll be just fine. Even very rapid revolutions like the current AI craze happen kinda slowly. ChatGPT was released 2 and a half years ago! A lot has changed in this field in the last 2.5 years, but not more than what a flexible person can keep up with.

Train your gut, then follow it

I don’t think for super long or very deeply when deciding what I should learn next. I act on instinct and whims for the most part. Part of the reason I’m able to trust my gut is I feel like I’ve honed in my intuition on things that’ll be fruitful or interesting to me. I know what I like.

That’s not to say you shouldn’t try new things! You absolutely should, especially if there’s lots of community interest on a topic (e.g. right now is a really fun time to learn RL for language models because everyone is talking about it).

I just mean that deciding what to learn doesn’t have to be a long thoughtful process. Train your gut with experience, then follow it.

Learn it your way

And of course, the obligatory “do what works for you” section. I wanted to make it clear that these lessons are not based on any science or research I’ve done on the topic, just things I’ve discovered from trying to learn lots of stuff. You should try things out and figure out what works for you. Make sure to measure progress in a principled way. Formal milestones are great, but even just reflecting once a week/month on the progress you’ve made (or haven’t) can be enough.

The last thing I’ll say is being efficient when learning is great, but don’t worry too much about optimizing your process. Doing something is better than doing nothing, and just because your process isn’t optimal doesn’t mean it’s not effective.

Bonus: 80-20 Principle

I wanted to put this in the section “Learning should (only sometimes) be hard”, but it didn’t fit neatly. Anyways, here’s the 80-20 principle:

When learning, you can get 80% of the understanding with 20% of the effort. That last bit of really understanding something is the hardest part. This suggests a learning strategy: hit that 80% understanding point with most of the things you learn, and only go for deeper understanding on the stuff you’re really into or is necessary for your work (these are ideally the same set of things, but life isn’t always ideal…)

Bonus: story on learning RL

This story was originally in the section “Top-down and bottom-up”

Since joining the post-training team at Ai2, I’ve needed to learn about reinforcement learning (RL) for language models. This is something I knew very little about when I started at Ai2. Here’s how I applied the top-down v. bottom-up framework:

GOAL: make our language models (OLMo and Tulu) good at coding 2

[TOP-DOWN]: I wanted to hit the ground running and start running experiments ASAP, so I first learned about how to launch RL runs with different settings in our post-training framework, open-instruct.

Open-instruct is very well-designed (kudos to Costa Huang and the rest of the team), so it wasn’t too difficult to get setup with RL experiments on code. Mission accomplished, right? Well, I still had tons of questions about what was actually happening when I ran an experiment. Like, what the hell is num_samples_per_prompt_rollout?

I started reading the section on policy gradient algorithms in Nathan Lambert’s RLHF Book. I was particularly interested in Group Relative Policy Optimization (GRPO) since that’s what everyone’s been talking about on Twitter lately. The book mentions how the key innovation of GRPO is how it estimates advantage, which is the difference between the Q function and the V function.

[BOTTOM-UP]: Ah, I remember the Q and V functions from an AI class I took in undergrad. GRPO estimates advantage by sampling many completions to a prompt. Together, these form a group. We take the normalized reward (subtract mean and divide by std deviation) as the advantage. Ohh, so num_samples_per_prompt_rollout is the size of the group by which we normalize rewards to estimate advantage.

This was the moment my top-down learning path collided with my foundation which was built up from previous bottom-up learning. I didn’t have to do any more research — I (more or less) understood how the GRPO algorithm works for language models. I learned something! But I didn’t stop there. I’m still working through the rest of the RLHF book, even though it’s not immediately relevant to my work. Today it’s GRPO, tomorrow it’ll be something else that everyone’s talking about. So I want to understand the background that got us here, so I can readily learn whatever the next thing is.

If you are interested in this, I’ll probably give it another go at some point. But in the meantime you should totally checkout Alex Zhang’s blog on efficient deep learning

I’ll write a blog post on why LM’s are so good at coding, but for context RL is an important part of this capability.

I like where you point out how light forms of learning can make a big difference. In my case, as someone starting with very little AI knowledge, I find that listening or reading things about ML get my brain going.

What's interesting is that seems to be interdisciplinary in nature